Search is evolving. Again. With Google’s new AI Mode, you don’t just type a query and scroll through a list of links. Now, you can snap a photo, speak a question, or even upload a video – and Google’s AI will understand what you mean, follow your train of thought, and respond as if you’re having a conversation. And yes, it actually works.

Google has begun expanding access to AI Mode, its most advanced AI Search experience. First offered to Google One AI Premium subscribers, it’s now rolling out to more users through Search Labs. This version goes well beyond the AI Overviews many people are already seeing. What sets it apart is its multimodal capability – the ability to understand not just words, but images, voice, and context.

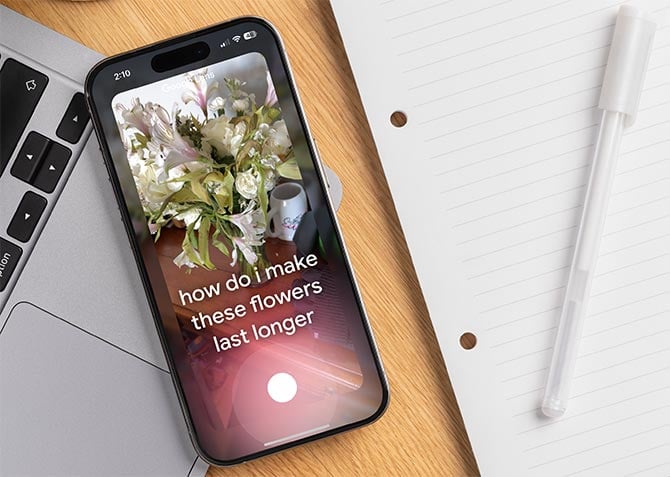

That last part is key. AI Mode isn’t just multimodal; it’s conversational. I recently tested it by snapping a photo of some cut flowers that were starting to droop and asking how to make them last longer. Google gave a thoughtful, practical answer: remove any leaves below the waterline, add flower food (such as sugar with a bit of vinegar), and trim the stems at a 45-degree angle. Curious, I followed up – “Does the 45-degree angle really make a difference?” – and it knew exactly what I meant. No need to rehash the full question. The system kept up with me, like a good assistant would.

This kind of back-and-forth is what makes AI Mode feel less like using a tool and more like talking to someone who actually understands you. It encourages you to explore, refine your thinking, and get more specific with each question. That’s something traditional search simply doesn’t do.

Under the hood, AI Mode runs on a custom version of Gemini 2.0, designed to handle open-ended, nuanced questions. It uses a technique Google calls “query fan-out,” where it breaks down your prompt into multiple sub-queries, issues those simultaneously across different data sources (including the Knowledge Graph, shopping data, and real-time web content), and synthesizes the findings into a single, coherent response. Think of it as doing 20 searches at once, then summarizing the highlights so you don’t have to.
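Google hasn’t published how query fan-out is actually implemented, so what follows is only a minimal sketch of the general fan-out/fan-in pattern described above, written in Python. Everything in it is a hypothetical stand-in: `decompose` naively splits a prompt on “ and ”, and the three `search_*` functions simply echo their input instead of calling real services. What it illustrates is the shape of the idea: split one prompt into sub-queries, send every sub-query to every source concurrently, then merge the results in a single step.

```python
import asyncio


# Hypothetical stand-ins for the data sources the article mentions
# (Knowledge Graph, shopping data, web content). They only echo the query.
async def search_knowledge_graph(query: str) -> str:
    await asyncio.sleep(0.01)  # simulate network latency
    return f"kg:{query}"


async def search_shopping(query: str) -> str:
    await asyncio.sleep(0.01)
    return f"shop:{query}"


async def search_web(query: str) -> str:
    await asyncio.sleep(0.01)
    return f"web:{query}"


SOURCES = [search_knowledge_graph, search_shopping, search_web]


def decompose(prompt: str) -> list[str]:
    """Hypothetical splitter: one sub-query per clause joined by ' and '."""
    return [part.strip() for part in prompt.split(" and ") if part.strip()]


async def fan_out(prompt: str) -> list[str]:
    """Send every sub-query to every source at once and collect the results."""
    sub_queries = decompose(prompt)
    tasks = [source(q) for q in sub_queries for source in SOURCES]
    # gather runs the coroutines concurrently and returns results in task order.
    return await asyncio.gather(*tasks)


def synthesize(results: list[str]) -> str:
    """Fan-in step: a trivial placeholder for the AI summarization stage."""
    return " | ".join(results)


if __name__ == "__main__":
    results = asyncio.run(fan_out("keep tulips fresh and best vase shape"))
    print(synthesize(results))
```

Because all six lookups in this example (two sub-queries times three sources) wait concurrently, the whole run takes roughly as long as one simulated lookup rather than six. That is the practical appeal of “doing 20 searches at once.”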

Multimodal functionality builds on Google Lens, but with a major upgrade. AI Mode doesn’t just recognize what’s in an image – it understands the full scene. It can tell a wilted tulip from a healthy one, or recognize how the arrangement of books on a shelf might signal which ones you’ve already read. It’s subtle, but meaningful.

As with any experimental product, it’s not perfect. There will be times when the AI doesn’t quite nail the response, or when the fallback links feel more like old-school search. But Google’s willingness to open this up for real-world feedback – rather than relying on internal testing alone – shows it’s serious about making search better, not just flashier.

From what I’ve seen, this is a genuine step forward. Multimodal AI in Search makes the experience feel more intuitive, more human. It bridges the gap between how we think and how we search.

You can try it now by opting in through Search Labs in the Google app on Android or iOS. It’s worth checking out – not just to see where search is going, but to experience a more natural way of getting answers to your everyday questions.

[Image credit: Screenshot via Techlicious, iPhone mockup via Canva]